The Rules-Based Personalization Trap

Most enterprise personalization systems operate like sophisticated if-then machines: If customer segment = premium AND product category = electronics, THEN show premium electronics banner. This approach worked in 2015. In 2025, it’s a competitive liability.
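
To make the if-then pattern concrete, here is a minimal sketch of a rules-based banner selector. The `Customer` fields, the `RULES` table, and the banner names are hypothetical and exist only for illustration; the point is that every outcome has to be written down in advance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    segment: str            # e.g. "premium" (hypothetical field)
    product_category: str   # e.g. "electronics" (hypothetical field)


# Hypothetical static rule table: (segment, category) -> banner id.
# Nothing here changes until someone edits the table by hand.
RULES = {
    ("premium", "electronics"): "premium_electronics_banner",
}
DEFAULT_BANNER = "generic_banner"


def choose_banner(customer: Customer) -> str:
    """Return a banner using fixed if-then rules only."""
    key = (customer.segment, customer.product_category)
    return RULES.get(key, DEFAULT_BANNER)


print(choose_banner(Customer("premium", "electronics")))
print(choose_banner(Customer("premium", "cameras")))
```

Any combination that nobody anticipated falls through to the default, which is the adaptability gap this section describes.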

The fundamental limitation isn’t complexity; it’s latency and adaptability. Rules-based systems decide using logic that was fixed in advance, while real-time ML systems decide using patterns learned from data that may be only milliseconds old. That gap shows up in measurable business results.

Consider the architectural contrast:

Rules-Based System:

- Decision latency: 50-200ms

- Data freshness: Hours to days

- Personalization depth: 10-50 variables

- Adaptation cycle: Quarterly updates

Real-Time ML System:

- Decision latency: 5-20ms

- Data freshness: Sub-second

- Personalization depth: 1,000+ variables

- Adaptation cycle: Continuous learning

The Real-Time ML Architecture Framework

Leading organizations are implementing what we term Streaming Intelligence Architecture: a technical framework that processes behavioral signals, contextual data, and business rules in real time, so that personalized experiences can adapt within an individual user session.

Core Components:

Event Stream Processing Layer: Real-time data ingestion that handles millions of events per second using Apache Kafka or AWS Kinesis, with a sub-10ms latency requirement for critical personalization signals.
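
As a rough illustration of what this layer computes, the sketch below maintains a per-user sliding-window event count in memory. It assumes events arrive as `(user_id, event_type, timestamp)` values; in production they would come from a Kafka or Kinesis consumer, which is deliberately left out here. The class name `SlidingWindowCounter` is hypothetical.

```python
from collections import defaultdict, deque


class SlidingWindowCounter:
    """Counts events per (user, event type) over the last `window_s` seconds."""

    def __init__(self, window_s: float = 60.0):
        self.window_s = window_s
        self._timestamps = defaultdict(deque)  # (user_id, event_type) -> timestamps

    def record(self, user_id: str, event_type: str, ts: float) -> None:
        """Store one event; timestamps are assumed to arrive in order per key."""
        self._timestamps[(user_id, event_type)].append(ts)

    def count(self, user_id: str, event_type: str, now: float) -> int:
        """Drop timestamps that fell out of the window, then return the count."""
        queue = self._timestamps[(user_id, event_type)]
        cutoff = now - self.window_s
        while queue and queue[0] <= cutoff:
            queue.popleft()
        return len(queue)


# Simulated stream standing in for a Kafka/Kinesis consumer (assumption).
counter = SlidingWindowCounter(window_s=60.0)
for ts in (0.0, 30.0, 50.0):
    counter.record("user-1", "product_view", ts)

print(counter.count("user-1", "product_view", now=70.0))  # 2
```

A signal such as "product views in the last minute" is the kind of session-level feature that a batch pipeline refreshed every few hours cannot provide.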

Feature Store Infrastructure: Centralized feature engineering and storage that gives training and inference pipelines consistent access to the same ML features, reducing model deployment time from weeks to hours.
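
The consistency idea can be sketched with a toy in-memory feature store: each feature is defined once, and both the training path and the serving path go through that single definition. The `FeatureStore` class and the feature names are hypothetical; a real deployment would use a dedicated feature-store product with persistent offline and online storage.

```python
from typing import Callable, Dict

FeatureFn = Callable[[dict], float]


class FeatureStore:
    """Toy feature store: one definition per feature, shared by all pipelines."""

    def __init__(self) -> None:
        self._definitions: Dict[str, FeatureFn] = {}
        self._online: Dict[str, Dict[str, float]] = {}

    def register(self, name: str, fn: FeatureFn) -> None:
        self._definitions[name] = fn

    def compute(self, raw: dict) -> Dict[str, float]:
        """Training path: derive features from a raw historical record."""
        return {name: fn(raw) for name, fn in self._definitions.items()}

    def write_online(self, entity_id: str, raw: dict) -> None:
        """Serving path: materialize the same features for low-latency reads."""
        self._online[entity_id] = self.compute(raw)

    def read_online(self, entity_id: str) -> Dict[str, float]:
        return dict(self._online.get(entity_id, {}))


store = FeatureStore()
store.register("cart_value", lambda raw: float(sum(raw["cart_prices"])))
store.register("is_returning", lambda raw: 1.0 if raw["visits"] > 1 else 0.0)

raw_event = {"cart_prices": [20.0, 5.5], "visits": 3}
store.write_online("user-1", raw_event)
print(store.compute(raw_event) == store.read_online("user-1"))  # True
```

Because both paths call `compute`, a model cannot be trained on one definition of `cart_value` and served with another.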

ML Inference Engine: Containerized model-serving infrastructure that supports A/B testing of multiple models simultaneously, with automatic failover to ensure 99.9% availability.
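
One way to picture A/B routing with failover is the sketch below. It assumes models are plain Python callables and that users are assigned to variants by hashing their id; the function names, variant names, and weights are hypothetical. Container orchestration and health checks are out of scope here.

```python
import hashlib
from typing import Callable, Dict, List, Tuple

Model = Callable[[Dict[str, float]], float]


def assign_variant(user_id: str, variants: List[Tuple[str, float]]) -> str:
    """Deterministically map a user to a variant; weights should sum to 1."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1]
    cumulative = 0.0
    for name, weight in variants:
        cumulative += weight
        if bucket < cumulative:
            return name
    return variants[-1][0]  # guards against floating-point rounding


def predict_with_failover(
    user_id: str,
    features: Dict[str, float],
    models: Dict[str, Model],
    variants: List[Tuple[str, float]],
    fallback: Model,
) -> Tuple[str, float]:
    """Score with the assigned variant; use the fallback model if it raises."""
    variant = assign_variant(user_id, variants)
    try:
        return variant, models[variant](features)
    except Exception:
        return "fallback", fallback(features)


models = {"control": lambda f: 0.10, "treatment": lambda f: 0.20}
variants = [("control", 0.5), ("treatment", 0.5)]
print(predict_with_failover("user-1", {}, models, variants, lambda f: 0.0))
```

Hashing the user id keeps each user in the same variant across requests, which is what makes the comparison between models meaningful.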

Decision API Layer: High-performance API layer that combines ML predictions with business rules, real-time inventory data, and personalization constraints to deliver final recommendations.
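
The combination step might look like the following sketch, which filters ML-ranked candidates by stock, a blocked-category rule, and a per-category cap. The `Candidate` type, the `decide` function, and the specific constraints are assumptions chosen for illustration, not a prescribed API.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Candidate:
    product_id: str
    score: float     # relevance predicted by the ML model
    category: str


def decide(
    candidates: List[Candidate],
    inventory: Dict[str, int],
    blocked_categories: Set[str],
    max_per_category: int = 2,
    limit: int = 3,
) -> List[str]:
    """Walk candidates from best to worst score and keep those passing every rule."""
    picked: List[str] = []
    per_category: Dict[str, int] = {}
    for c in sorted(candidates, key=lambda c: c.score, reverse=True):
        if inventory.get(c.product_id, 0) <= 0:
            continue  # real-time inventory: skip out-of-stock items
        if c.category in blocked_categories:
            continue  # business rule: category must not be shown
        if per_category.get(c.category, 0) >= max_per_category:
            continue  # personalization constraint: keep results diverse
        picked.append(c.product_id)
        per_category[c.category] = per_category.get(c.category, 0) + 1
        if len(picked) == limit:
            break
    return picked


candidates = [
    Candidate("a", 0.9, "electronics"),
    Candidate("b", 0.8, "electronics"),
    Candidate("c", 0.7, "electronics"),
    Candidate("d", 0.6, "books"),
    Candidate("e", 0.5, "alcohol"),
]
inventory = {"a": 0, "b": 5, "c": 2, "d": 1, "e": 9}
print(decide(candidates, inventory, blocked_categories={"alcohol"}))  # ['b', 'c', 'd']
```

The highest-scoring item is dropped because it is out of stock, which shows why the model's ranking alone is not the final answer.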

Strategic Implementation Framework

Phase 1: Foundation (Months 1-6). Implement event streaming infrastructure and feature store. Target: Single-digit millisecond event processing with 99.5% uptime.

Phase 2: Intelligence (Months 7-12). Deploy initial ML models focusing on high-impact use cases: product recommendations, content personalization, or dynamic pricing. Target: 15-25% improvement in key conversion metrics.

Phase 3: Optimization (Months 13-18). Implement advanced techniques including multi-armed bandits, contextual embeddings, and real-time model retraining. Target: 30-50% improvement over baseline systems.
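
Of the Phase 3 techniques, the multi-armed bandit is the easiest to show in a few lines. The sketch below uses Thompson sampling with Beta posteriors, one common bandit strategy and not necessarily the variant a given team would choose. The arm names and conversion rates in the simulation are made up for illustration.

```python
import random
from typing import Dict, List, Optional


class ThompsonBandit:
    """Thompson sampling over arms with binary (converted / not converted) rewards."""

    def __init__(self, arms: List[str], seed: Optional[int] = None) -> None:
        self._rng = random.Random(seed)
        self.successes = {arm: 0 for arm in arms}
        self.failures = {arm: 0 for arm in arms}

    def select(self) -> str:
        """Sample a plausible conversion rate per arm and play the best sample."""
        samples = {
            arm: self._rng.betavariate(self.successes[arm] + 1, self.failures[arm] + 1)
            for arm in self.successes
        }
        return max(samples, key=samples.get)

    def update(self, arm: str, converted: bool) -> None:
        if converted:
            self.successes[arm] += 1
        else:
            self.failures[arm] += 1


def simulate(true_rates: Dict[str, float], rounds: int, seed: int = 0) -> Dict[str, int]:
    """Run the bandit against simulated users; return how often each arm was shown."""
    rng = random.Random(seed)
    bandit = ThompsonBandit(list(true_rates), seed=seed + 1)
    pulls = {arm: 0 for arm in true_rates}
    for _ in range(rounds):
        arm = bandit.select()
        pulls[arm] += 1
        bandit.update(arm, rng.random() < true_rates[arm])
    return pulls


# Hypothetical conversion rates for three banner variants.
print(simulate({"banner_a": 0.02, "banner_b": 0.05, "banner_c": 0.30}, rounds=3000))
```

Unlike a fixed-split A/B test, the bandit shifts traffic toward the better-performing variant while the experiment is still running, which is the "continuous learning" behavior described earlier.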

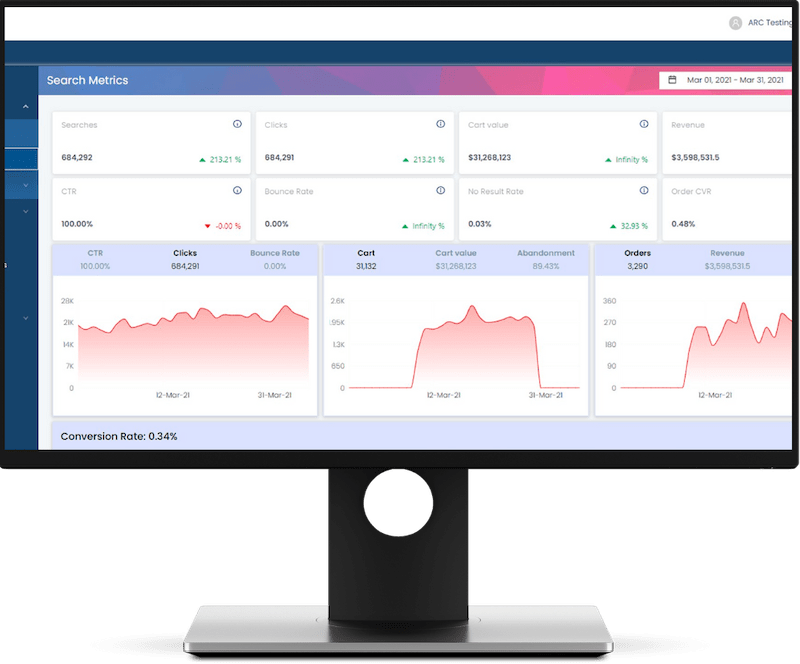

Measuring Hyperpersonalization ROI

The business case for real-time ML architecture typically demonstrates positive ROI within 12-18 months:

- Revenue Impact: 10-30% increase in conversion rates

- Operational Efficiency: 40-60% reduction in manual campaign management

- Customer Lifetime Value: 15-25% improvement through better experience matching

- Competitive Moat: Increasingly difficult for competitors to replicate sophisticated ML-driven experiences
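
A payback estimate of this kind reduces to simple cumulative arithmetic, sketched below. Every number in the example is a placeholder chosen for illustration (in thousands of dollars), not a benchmark; substitute your own cost and gain projections.

```python
from typing import List, Optional


def payback_month(
    upfront_cost: float,
    monthly_cost: float,
    monthly_gains: List[float],
) -> Optional[int]:
    """Return the first month (1-indexed) in which cumulative net value is >= 0."""
    cumulative = -upfront_cost
    for month, gain in enumerate(monthly_gains, start=1):
        cumulative += gain - monthly_cost
        if cumulative >= 0:
            return month
    return None  # not paid back within the projected horizon


# Placeholder scenario: no gains during a 6-month build, then steady gains.
gains = [0.0] * 6 + [150.0] * 18
print(payback_month(upfront_cost=600.0, monthly_cost=50.0, monthly_gains=gains))  # 15
```

In this placeholder scenario the running costs during the build phase deepen the deficit to 900 before gains start, so a net 100 per month takes nine further months to recover it.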